Unikernels

Purpose

Unikernels are specialized, single-purpose operating systems designed to run directly on a hypervisor. They are compiled from high-level source code into a standalone kernel that includes only the necessary components to run a specific application.

The main purpose of unikernels is to deploy applications more efficiently and securely than traditional operating systems do.

They are sealed at compile time and immutable afterwards, which reduces the attack surface and prevents unauthorized modifications.

And due to their specialized nature, unikernels often perform better at their specific tasks, since they eliminate the overhead of a general-purpose OS (there is no such thing as a “syscall” at all).

A unikernel also leaves out everything the application doesn’t use at runtime, like a shell or mouse and floppy-disk drivers (current Debian kernels still ship the latter, lol).

Also keep in mind that unikernels are usually not intended to run directly on bare metal. They rely on the hypervisor to provide essential services like device drivers and memory management through a consistent interface, regardless of the underlying hardware. They also need a specific bootloader (either the hypervisor’s own or a minimal custom one) that initializes the VM environment, sets up the address space and allocates memory pages to prepare the unikernel for execution.

You may ask: if unikernels are so awesome, why isn’t everybody using them?

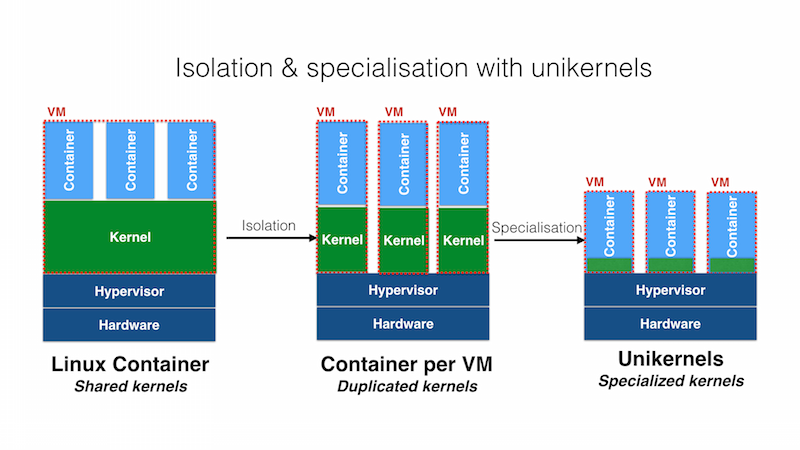

Let’s first look at the following visualization of virtualization approaches:

- Containerization (e.g., Docker): Containers package applications and their dependencies in isolated environments that share the host OS kernel. Containers offer portability, quick startup times, and efficient resource utilization.

- Traditional Virtual Machines (VMs): VMs run full operating systems and provide strong isolation between applications. They are more heavyweight than unikernels but support a wide range of applications and legacy software.

- Unikernels: Unikernels compile applications and their necessary libraries into a single bootable image that runs directly on a hypervisor. They offer minimal resource consumption, fast boot times, and enhanced security, tailored for specific, single-purpose applications.

There is always a trade-off between business needs and engineering complexity. Even though unikernels consume very few resources, which may make them the cheapest option to run, I think the cost of rewriting your software, establishing a new delivery process and supporting unikernel builds can be significant. So a unikernel is not a silver bullet.

But if performance and/or security is a key differentiator for your product, for example a game server or a simple but high-load backend API, it makes sense to try unikernels.

One of the benefits is that with unikernels, startup/reload time can be of the same order of magnitude as the time your application needs to handle a request: imagine a total boot time of 1-50 ms! Examples include on-the-fly updates in real-time apps or lifecycle management of serverless apps.

And I think that in a couple of years, unikernels may really catch up with containers in popularity, especially for high-load and high-security applications.

How it works

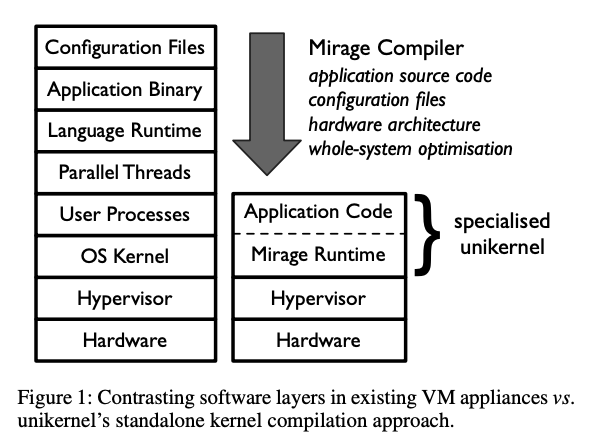

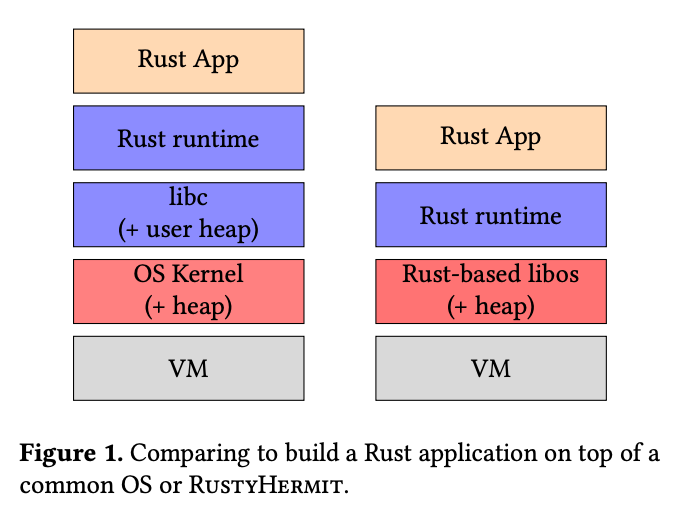

Here is a nice picture comparing the software layers, from one of the most well-known papers on unikernels:

As you can see, in the regular VM setup the application binary is separated from the hypervisor by many abstraction layers. This is done on purpose: kernel/user space separation, processes, lots of hardware-specific libraries, etc. give us the ability to run quite complex applications regardless of the language runtime and resource usage. Imagine an average ML application that runs Python, needs lots of threads, RAM and GPUs, uses shared C++ libraries alongside Rust ones managed through FFI, and needs the whole web stack to host a Jupyter notebook ;)

In the case of unikernels, the application itself, sealed together with its runtime (Mirage in the case of the paper), sits right on top of the hypervisor (e.g., Xen).

Instead of a full OS, the unikernel directly compiles application code along with the necessary system libraries and drivers into a single executable binary. This includes:

- Application Source Code: The code for the specific application, written in a high-level language (e.g., OCaml in the MirageOS case).

- Configuration Files: Configuration parameters needed by the application, integrated directly into the binary at compile time.

- System Libraries and Drivers: Essential libraries and drivers that are linked with the application code.
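To make the “configuration compiled into the binary” point concrete, here is a rough Rust analogue. MirageOS itself does this in OCaml with its own configuration tooling, which this sketch does not reproduce. The `Config` struct, its fields and values are hypothetical; because `CONFIG` is a `const`, the values are fixed at build time and there is no config file to read or tamper with at runtime.

```rust
/// Hypothetical application configuration. Being a `const`, it is fixed at
/// compile time and embedded in the binary; nothing is read at runtime.
struct Config {
    listen_port: u16,
    max_connections: usize,
    greeting: &'static str,
}

const CONFIG: Config = Config {
    listen_port: 8080,
    max_connections: 128,
    greeting: "hello from a sealed image",
};

// Compile-time sanity check: the build itself fails if this does not hold.
const _: () = assert!(CONFIG.max_connections > 0);

/// Render the baked-in configuration as a startup banner.
fn banner(cfg: &Config) -> String {
    format!(
        "{} (port {}, max {} connections)",
        cfg.greeting, cfg.listen_port, cfg.max_connections
    )
}

fn main() {
    println!("{}", banner(&CONFIG));
}
```

In a real project, `include_str!` (embed a file) or `env!` (read an environment variable at build time) give a similar effect: the value ends up inside the image rather than being loaded at startup.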

The end artifact after compilation would be the unikernel binary. Depending on the deployment environment it could be:

- ELF (Executable and Linkable Format): a common binary format for Unix operating systems, also used by the Xen hypervisor.

- Raw Binary: a straightforward sequence of machine instructions. Can be used with KVM.

- Bootable Disk Images: Such as ISO or QCOW2, suitable for environments like KVM, which emulate hardware for running the unikernel.

- PE (Portable Executable): Used for Windows environments and compatible hypervisors like Hyper-V.
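Most of these formats can be told apart by their leading “magic” bytes, which is handy when you are not sure what a build produced. The Rust sketch below is a heuristic of my own, not part of any unikernel toolchain; `ImageFormat` and `detect_format` are hypothetical names. A raw binary has no header at all, so it is only the fallback, and a file that merely happens to start with the same bytes would be misclassified.

```rust
/// Formats from the list above that carry a recognizable header.
#[derive(Debug, PartialEq)]
enum ImageFormat {
    Elf,
    Pe,
    Qcow2,
    Iso9660,
    RawOrUnknown,
}

/// Guess the format of a unikernel artifact from its bytes.
fn detect_format(bytes: &[u8]) -> ImageFormat {
    if bytes.starts_with(&[0x7F, b'E', b'L', b'F']) {
        ImageFormat::Elf
    } else if bytes.starts_with(b"MZ") {
        // PE files begin with a DOS "MZ" stub header.
        ImageFormat::Pe
    } else if bytes.starts_with(&[b'Q', b'F', b'I', 0xFB]) {
        ImageFormat::Qcow2
    } else if bytes.len() >= 0x8006 && bytes[0x8001..0x8006] == *b"CD001" {
        // ISO 9660: the first volume descriptor starts at byte 0x8000
        // (sector 16); its "CD001" identifier follows the 1-byte type code.
        ImageFormat::Iso9660
    } else {
        // Raw binaries are just machine code, so there is nothing to match.
        ImageFormat::RawOrUnknown
    }
}

fn main() -> std::io::Result<()> {
    match std::env::args().nth(1) {
        Some(path) => {
            let bytes = std::fs::read(&path)?;
            println!("{path}: {:?}", detect_format(&bytes));
        }
        None => eprintln!("usage: detect-format <image-file>"),
    }
    Ok(())
}
```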

After compilation, the unikernel binary is loaded into the hypervisor via a bootloader. Usually, unikernels are compiled so that they “know” which hypervisor they will run on; this special kind of virtualization is usually referred to as paravirtualization (PV). Such apps have to be run with a special bootloader, which itself is a PV kernel that acts as a bootloader.

Runtime

The runtime is a specialized environment tailored for running on a hypervisor. It replaces the traditional OS kernel, providing essential services such as memory management, scheduling, and I/O. The unikernel integrates the language runtime (e.g., the OCaml runtime for MirageOS) and the necessary protocol libraries (e.g., TCP/IP stack, file systems), compiled directly with the application code.

Memory

Unlike a traditional OS with multiple address spaces, unikernels use a single address space, which reduces context-switching overhead and improves performance.

Here is an example of a typical memory layout in operating systems:

In this case, each process is isolated from the other processes and from the kernel by its own virtual memory.

In unikernels, instead, there is no such thing as a “process” at all, nor a “user/kernel space” split. There is only a single address space, where all code and data share the same memory address range.
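A toy Rust model can help picture this. It is not how any particular unikernel manages memory: a `Vec<u8>` stands in for the single address range, and `AddressSpace` with its bump allocator is a hypothetical illustration. The point is that the “kernel” allocator and the application use the same plain addresses, with no per-process page tables and no user/kernel copy in between.

```rust
/// Toy single address space: one contiguous region shared by the "kernel"
/// (the allocator) and the application. Addresses are plain offsets.
struct AddressSpace {
    memory: Vec<u8>,
    next_free: usize, // bump pointer: everything below it is allocated
}

impl AddressSpace {
    fn new(size: usize) -> Self {
        AddressSpace { memory: vec![0; size], next_free: 0 }
    }

    /// Bump allocation: align the start, hand out [start, start + len) and
    /// never reuse memory (fine for a sketch, not for production).
    fn alloc(&mut self, len: usize, align: usize) -> Option<usize> {
        assert!(align.is_power_of_two());
        let start = (self.next_free + align - 1) & !(align - 1);
        let end = start.checked_add(len)?;
        if end > self.memory.len() {
            return None; // out of memory: no swap, no other process to evict
        }
        self.next_free = end;
        Some(start)
    }

    fn write(&mut self, addr: usize, data: &[u8]) {
        self.memory[addr..addr + data.len()].copy_from_slice(data);
    }

    fn read(&self, addr: usize, len: usize) -> &[u8] {
        &self.memory[addr..addr + len]
    }
}

fn main() {
    let mut space = AddressSpace::new(4096);
    // The "network stack" and the "application" allocate from the same region...
    let rx_buf = space.alloc(64, 16).expect("rx buffer");
    let app_buf = space.alloc(32, 8).expect("app buffer");
    space.write(rx_buf, b"GET / HTTP/1.1");
    // ...so the application reads the received bytes in place, at the same address.
    println!(
        "rx at {rx_buf}, app at {app_buf}: {:?}",
        std::str::from_utf8(space.read(rx_buf, 14))
    );
}
```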

Multithreading

In Mirage (as we’re still referring to the paper), since it’s written in OCaml, threads are managed by Lwt. It implements cooperative multitasking, with an option to create preemptive threads (it maintains an internal pool of system threads).

So it basically depends on which kind of runtime the particular unikernel implementation uses.
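To make “cooperative” concrete, here is a tiny round-robin scheduler in Rust. It is only a sketch in the spirit of Lwt’s cooperative model (Lwt itself is an OCaml, promise-based library with a different API); `Step`, `Task`, `run`, `counter` and `demo` are hypothetical names. Each task runs until it voluntarily yields, and nothing can preempt it.

```rust
use std::cell::RefCell;
use std::collections::VecDeque;
use std::rc::Rc;

/// What a task reports after running one slice of work.
enum Step {
    Yield, // give the CPU back voluntarily; run me again later
    Done,
}

/// A task is a closure that does a bit of work and returns.
type Task = Box<dyn FnMut() -> Step>;

/// Round-robin cooperative scheduler: run each task until it yields,
/// then move it to the back of the queue. No preemption, no timers.
fn run(mut queue: VecDeque<Task>) {
    while let Some(mut task) = queue.pop_front() {
        match task() {
            Step::Yield => queue.push_back(task),
            Step::Done => {}
        }
    }
}

/// A task that logs "<name><i>" on each step and finishes after `steps` steps.
fn counter(name: &'static str, steps: u32, log: Rc<RefCell<Vec<String>>>) -> Task {
    let mut i = 0;
    Box::new(move || {
        i += 1;
        log.borrow_mut().push(format!("{name}{i}"));
        if i < steps { Step::Yield } else { Step::Done }
    })
}

fn demo() -> Vec<String> {
    let log = Rc::new(RefCell::new(Vec::new()));
    let mut queue: VecDeque<Task> = VecDeque::new();
    queue.push_back(counter("a", 3, Rc::clone(&log)));
    queue.push_back(counter("b", 2, Rc::clone(&log)));
    run(queue);
    let result = log.borrow().clone();
    result
}

fn main() {
    println!("{:?}", demo()); // prints ["a1", "b1", "a2", "b2", "a3"]
}
```

The two tasks interleave only at their yield points. A task that loops without yielding would stall everything, which is the usual trade-off of cooperative multitasking.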

Popular unikernel implementations

Mirage OS

Mirage is, I think, one of the first stable implementations of unikernels, with a very nice paper (absolutely worth reading). It produces unikernels by compiling and linking OCaml code into a bootable Xen VM image.

On Xen it uses a PV bootloader, and on KVM it uses Solo5.

Here you can find some examples of web apps.

The downside is that it’s all OCaml, so you have to write your apps in OCaml as well. I personally don’t know anyone building anything in OCaml, but the community is alive though ;)

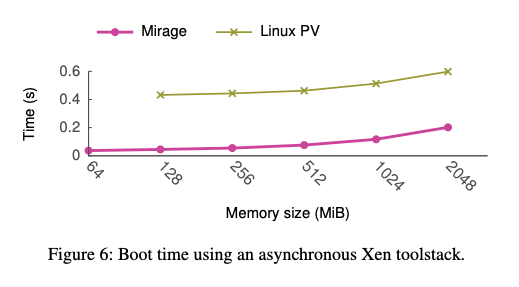

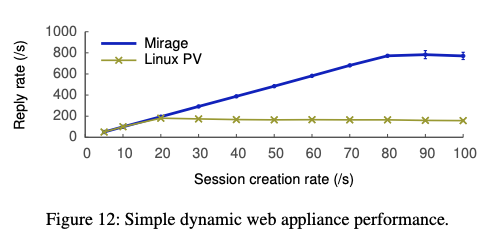

In the paper, they provide a nice performance analysis:

- Boot times against the size of the bootable image:

- Dynamic web performance, simulating a simple Twitter-like app (the plateau means the task becomes CPU-bound):

As expected, the unikernel approach has a significant advantage in both startup time and the number of requests per second (RPS) handled by the web app.

Hermit OS

Hermit is another unikernel implementation. It started as a C project and has since been reimplemented in Rust.

It’s more flexible than Mirage, since apps can be written in C/C++/Go/Fortran.

For Rust, they provide a special crate.

Hermit uses its own loader to boot in QEMU/KVM. The authors even implemented their own lightweight hypervisor, uhyve (100% Rust).

It’s pretty nice that developers can rely on the Hermit ecosystem end to end and use Rust all the way.

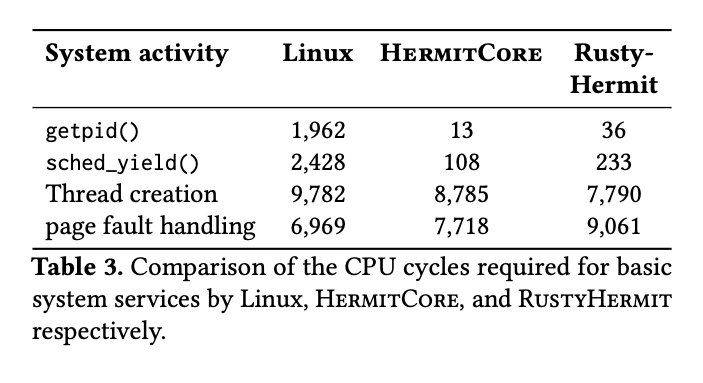

The authors also published a RustyHermit paper, where they compared Linux, HermitCore and RustyHermit.

Here we can see a comparison of the software layers of a regular Rust app and a RustyHermit unikernel:

And here is a quite interesting table showing the difference in CPU cycles for certain small operations:

The overhead of the unikernels is clearly smaller, because system calls are mapped to ordinary function calls.
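A minimal Rust sketch of what “system calls mapped to ordinary function calls” means. `LibOs`, `sys_write` and `Errno` are hypothetical names (this is not the Hermit kernel’s API), and a `Vec<u8>` stands in for the console device. On Linux, `write` traps into the kernel with a privilege switch; here it is just a method call inside the same binary, which the compiler is free to inline.

```rust
/// Errors our toy "kernel" can report (a tiny subset of POSIX errno values).
#[derive(Debug, PartialEq)]
enum Errno {
    BadFd,
}

/// Hypothetical library-OS state. In a unikernel this is just another
/// struct linked into the same binary as the application.
struct LibOs {
    console: Vec<u8>, // stands in for the hypervisor's console device
}

impl LibOs {
    /// Plays the role of the `write(2)` system call. It is an ordinary
    /// function call: no trap instruction and no privilege-level switch.
    fn sys_write(&mut self, fd: i32, buf: &[u8]) -> Result<usize, Errno> {
        match fd {
            // stdout and stderr both go to the console
            1 | 2 => {
                self.console.extend_from_slice(buf);
                Ok(buf.len())
            }
            _ => Err(Errno::BadFd),
        }
    }
}

fn main() {
    let mut os = LibOs { console: Vec::new() };
    let written = os.sys_write(1, b"hello from the same address space\n").unwrap();
    print!("{}", String::from_utf8_lossy(&os.console));
    println!("wrote {written} bytes");
}
```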

RustyHermit wins at thread creation, but is ~1300 cycles slower at page-fault handling (accessing an unmapped memory page), which is most probably caused by the logic behind its memory safety guarantees.

Unikraft

The last example is Unikraft. It’s implemented in C and supports C/C++/JS/Go/Python/Rust; check the examples catalog.

They have very nice docs and a toolchain: their Kraftfile and the kraft CLI feel very similar to Docker from the user’s perspective.

Another benefit is that the authors created a packaging system for unikernel images based on the OCI Image Specification.

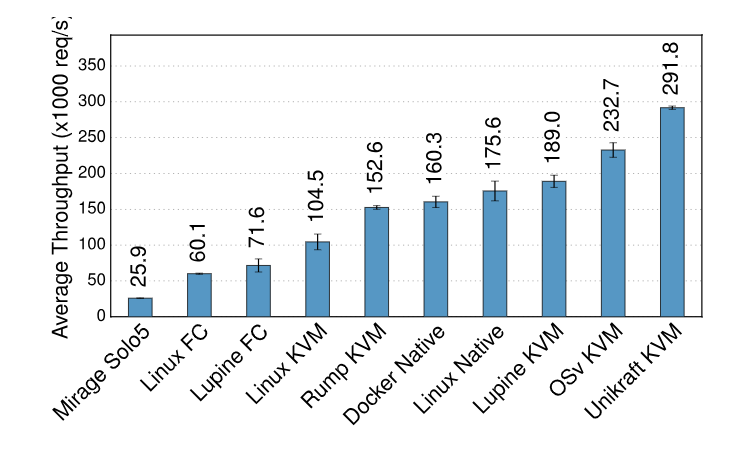

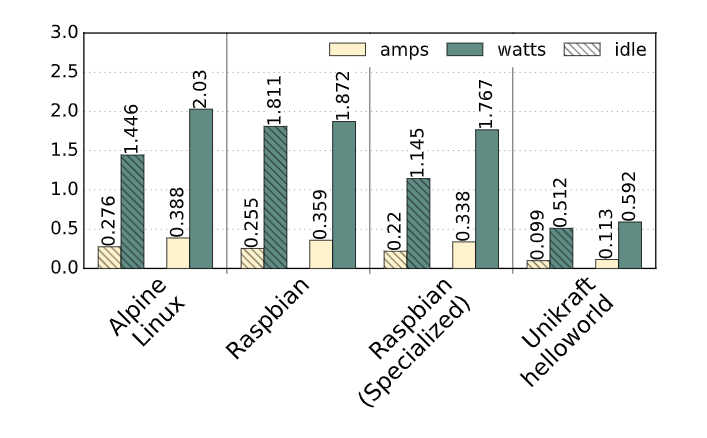

Here are some interesting performance numbers:

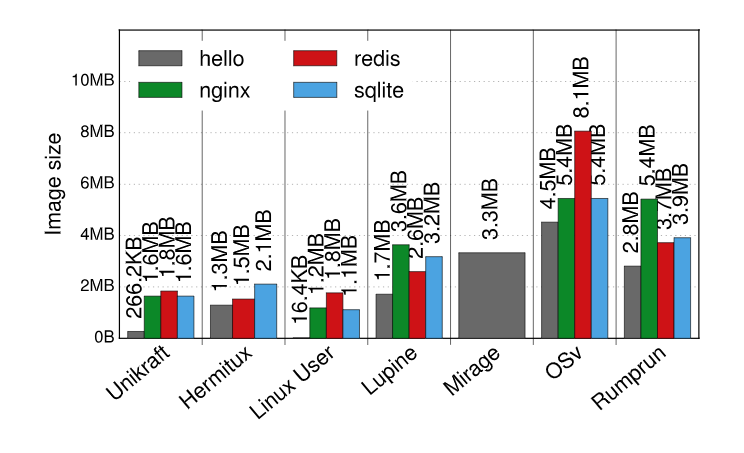

- Let’s start with image size: it stays under 2 MB for “real” apps like Redis or SQLite:

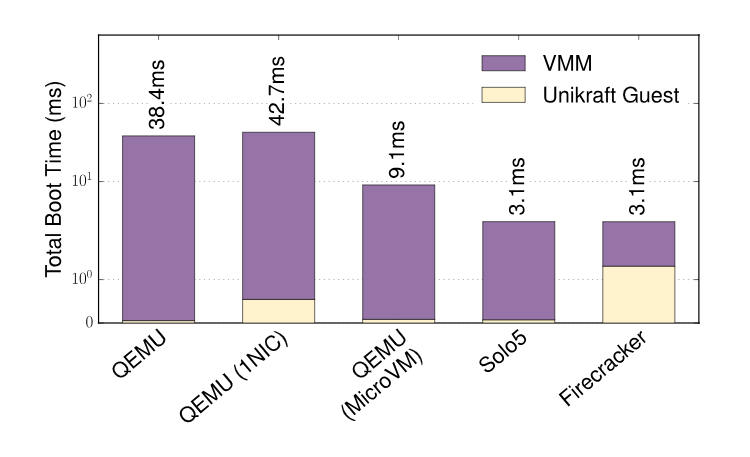

- Boot times using various hypervisors:

- Simple web app throughput:

- And a somewhat unexpected one, power consumption (really cool though, and possibly useful for optimizing the power/thermal efficiency of devices):

The common pattern across all implementations is that your application still depends a lot on how the unikernel runtime component is implemented.

I think any of the above should work just fine if your application implements simple network/disk logic, but I’m not sure how easy it would be to port an existing app that uses GPUs or other special computing devices, which are quite common in the ML world.

From the developer’s point of view, Unikraft seems to be the most production-ready and could be integrated into a real development flow with the least risk. Regarding ML, they are also working on supporting tensor computing frameworks like TensorFlow and PyTorch, so fingers crossed.

Let’s run Rust unikernel!

Finally, just for fun, let’s build a Rust app as a unikernel with Hermit.

I used an m5zn.metal instance for the experiments: it’s a real bare-metal AWS server, so it can run a type 1 hypervisor.

Install dependencies

Base:

sudo apt -y install build-essential

Rust:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

Then reload PATH:

source "$HOME/.cargo/env"

NASM assembler:

sudo apt update

sudo apt -y install nasm

QEMU

Check that the CPU supports hardware virtualization:

grep -E --color '(vmx|svm)' /proc/cpuinfo

If anything is found, you’re ready to go.
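The same check can be done programmatically. The Rust sketch below (a hypothetical helper, not part of any tool used here) reads /proc/cpuinfo and looks for `vmx` (Intel VT-x) or `svm` (AMD-V) as whole words in the `flags` lines, which is slightly stricter than the grep above, since grep also matches substrings.

```rust
use std::fs;

/// Return the hardware virtualization flag advertised in /proc/cpuinfo text,
/// if any. Only the "flags" field is inspected, and flags are matched as
/// whole words, so e.g. "svm_lock" on its own does not count as "svm".
fn virtualization_flag(cpuinfo: &str) -> Option<&'static str> {
    for line in cpuinfo.lines() {
        if let Some((key, value)) = line.split_once(':') {
            if key.trim() == "flags" {
                for flag in value.split_whitespace() {
                    match flag {
                        "vmx" => return Some("vmx (Intel VT-x)"),
                        "svm" => return Some("svm (AMD-V)"),
                        _ => {}
                    }
                }
            }
        }
    }
    None
}

fn main() {
    match fs::read_to_string("/proc/cpuinfo") {
        Ok(info) => match virtualization_flag(&info) {
            Some(flag) => println!("hardware virtualization available: {flag}"),
            None => println!("no vmx/svm flag found"),
        },
        Err(e) => eprintln!("cannot read /proc/cpuinfo: {e}"),
    }
}
```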

After that, check that KVM virtualization is supported by your OS:

sudo apt -y install cpu-checker

kvm-ok

The output should look like this:

INFO: /dev/kvm exists
KVM acceleration can be used

Finally, install QEMU:

sudo apt -y install qemu qemu-kvm

These commands should then print the QEMU and KVM versions:

apt show qemu-system-x86

kvm -version

Rust bootloader

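Clone the rusty loader:

git clone https://github.com/hermit-os/loader.git

Then go to the cloned directory and compile it:

cd loader
cargo xtask build --target x86_64

Here you can find the flags for other platforms.

When using QEMU, you’ll pass the path to this loader (the compiled binary will be in target/x86_64/).

Optionally, copy the compiled executable to /usr/bin to keep it in a well-known location. Note that QEMU’s -kernel option expects a file path and does not search PATH, so either pass the full path to the loader or run QEMU from the directory that contains it.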

Build

Let’s build the simple HTTP server example provided in hermit-rs.

First, clone the project and go to the subfolder with the httpd code.

Then build it (-Zbuild-std is a nightly-only Cargo flag, so a nightly Rust toolchain is required):

cargo build \
  -Zbuild-std=std,panic_abort \
  --target x86_64-unknown-hermit \
  --release
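For orientation, here is roughly what a minimal HTTP server looks like in plain std Rust. This is not the hermit-rs httpd source; it is a hypothetical sketch that runs on a regular host, serves a single request from a client thread so that it terminates, and binds port 0 so the OS picks a free port. Whether it builds unchanged for `x86_64-unknown-hermit` depends on the Hermit kernel being built with networking support, so treat that part as an assumption.

```rust
use std::io::{BufRead, BufReader, Read, Write};
use std::net::{TcpListener, TcpStream};
use std::thread;

/// Build a minimal HTTP/1.1 response with a plain-text body.
fn http_response(body: &str) -> String {
    format!(
        "HTTP/1.1 200 OK\r\nContent-Type: text/plain\r\nContent-Length: {}\r\nConnection: close\r\n\r\n{}",
        body.len(),
        body
    )
}

/// Read the request line, skip the headers, and answer with a greeting.
fn handle(mut stream: TcpStream) -> std::io::Result<()> {
    let mut reader = BufReader::new(stream.try_clone()?);
    let mut request_line = String::new();
    reader.read_line(&mut request_line)?;
    // Headers end with an empty "\r\n" line (2 bytes); EOF returns 0.
    let mut header = String::new();
    while reader.read_line(&mut header)? > 2 {
        header.clear();
    }
    let body = format!("Hello from a unikernel! You asked for: {}", request_line.trim_end());
    stream.write_all(http_response(&body).as_bytes())?;
    Ok(()) // both handles are dropped here, closing the connection
}

fn main() -> std::io::Result<()> {
    // A real server would bind a fixed port and loop over `listener.incoming()`.
    let listener = TcpListener::bind("127.0.0.1:0")?;
    let addr = listener.local_addr()?;

    // One client request, so the demo terminates on its own.
    let client = thread::spawn(move || -> std::io::Result<String> {
        let mut conn = TcpStream::connect(addr)?;
        conn.write_all(b"GET / HTTP/1.1\r\nHost: localhost\r\n\r\n")?;
        let mut reply = String::new();
        conn.read_to_string(&mut reply)?; // reads until the server closes
        Ok(reply)
    });

    let (stream, _) = listener.accept()?;
    handle(stream)?;
    println!("{}", client.join().expect("client thread panicked")?);
    Ok(())
}
```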

Run

Run and test the built unikernel, passing the compiled loader and the app to QEMU (the build above used --release, so the app is in the release directory):

qemu-system-x86_64 \
  -cpu qemu64,apic,fsgsbase,fxsr,rdrand,rdtscp,xsave,xsaveopt \
  -smp 1 -m 64M \
  -device isa-debug-exit,iobase=0xf4,iosize=0x04 \
  -display none -serial stdio \
  -kernel hermit-loader \
  -initrd target/x86_64-unknown-hermit/release/httpd

Now just check the stdout ;)